In today’s digital landscape, user experience (UX) has become the cornerstone of online success. A website may have great content, strong branding, and advanced functionality, but if users find it difficult to navigate, conversion rates will suffer. This is where A/B testing for better UX comes into play. By systematically testing different versions of your website elements, you can determine what resonates most with users and optimize accordingly. This guide will walk you through the fundamentals, benefits, tools, and practical tips to make A/B testing a reliable part of your UX strategy.

What is A/B Testing in UX?

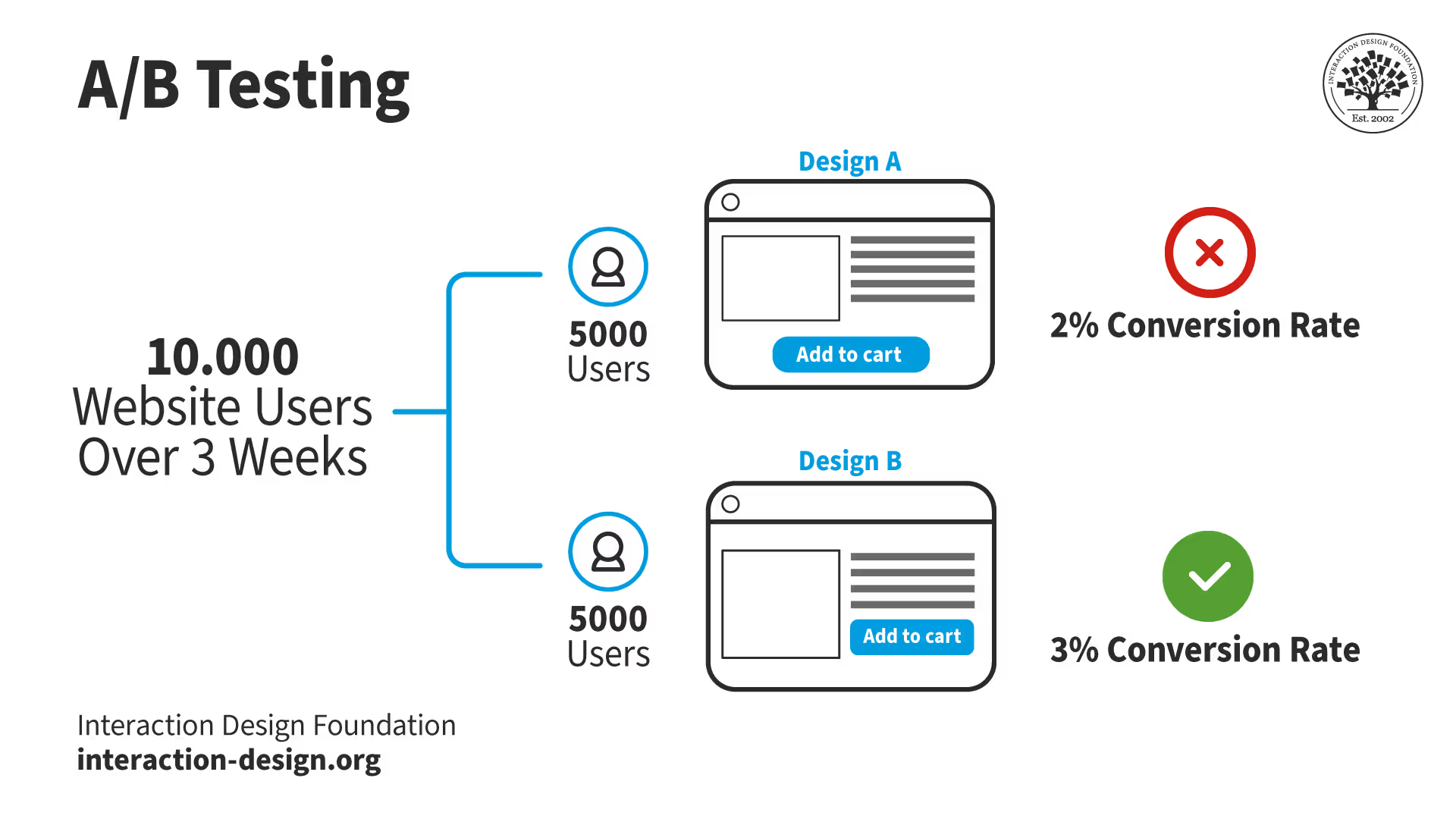

A/B testing, also known as split testing, is a method of comparing two versions of a web page, app interface, or design element to see which performs better on a chosen metric. For example, you might test two different headlines, button colors, or call-to-action placements. The key is to measure how users actually engage with and behave on each version, then make data-driven improvements. When applied strategically, A/B testing ensures that design decisions are based not on assumptions but on how real users behave.

Why A/B Testing Matters for UX

User experience design focuses on usability, accessibility, and satisfaction. Without testing, businesses often rely on guesswork, which can lead to wasted resources and poor results. Some of the main reasons to use A/B testing for better UX include:

- Increased Conversions: Simple design tweaks can boost sign-ups, sales, and engagement.

- Improved Navigation: Testing menu structures or layouts can make sites more intuitive.

- Reduced Bounce Rates: Identifying friction points helps retain visitors longer.

- Enhanced Customer Satisfaction: When users find a website easier to use, they tend to be more satisfied and more likely to return.

In short, A/B testing for better UX bridges the gap between user expectations and business goals.

Key Elements to Test for UX Improvement

Not every element of a website has the same impact on user experience. To maximize results, focus on areas where small changes can lead to significant improvements. Here are some elements to prioritize when running A/B testing for better UX:

- Headlines and Copywriting: Different tones or wording can influence engagement.

- CTA Buttons: Placement, color, and text can affect click-through rates.

- Page Layouts: Minimalist vs. content-heavy designs impact user flow.

- Forms: Length, required fields, and design can make or break conversions.

- Images and Videos: Media type and positioning influence trust and attention.

- Navigation Menus: Testing simplified vs. detailed menus helps refine usability.

The A/B Testing Process Step by Step

Running successful A/B tests requires a structured process. Here’s how to approach A/B testing:

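- Identify Goals: Define what you want to improve and the metric that will measure it, such as more sign-ups or a lower bounce rate.

- Form Hypotheses: Predict how a specific change will affect that metric, for example: “a shorter form will increase sign-ups.”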

- Create Variants: Design two or more versions of the chosen element.

- Run the Test: Randomly divide traffic between versions to collect unbiased data.

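- Analyze Results: Compare engagement, conversions, and time on page across variants, and check that the difference is statistically significant before declaring a winner.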

- Implement Changes: Apply the winning variation and continue iterating.

By following this cycle, A/B testing for better UX becomes an ongoing improvement strategy rather than a one-time effort.
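The “Analyze Results” step usually comes down to one question: is the gap between two conversion rates larger than chance alone would explain? Below is a minimal sketch using a standard two-proportion z-test. The visitor and conversion counts are hypothetical, the function name is illustrative, and dedicated testing tools may use different statistical methods (for example, Bayesian or sequential approaches).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: conversion rate A == conversion rate B."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate, assuming the null hypothesis of no difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 10,000 visitors per variant, 5.0% vs 5.8% conversion
    z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("significant at the 5% level" if p < 0.05 else "not significant at the 5% level")
```

With these counts, the 0.8 percentage-point gap clears the conventional 0.05 threshold. With a tenth of the traffic (1,000 visitors per variant), the same rates would not.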

Tools for A/B Testing

To streamline the process, marketers and designers rely on A/B testing tools that provide accurate data and insights. Some of the most popular platforms include:

- Google Optimize – Formerly a free tool integrated with Google Analytics; Google discontinued it in September 2023, so former users need one of the alternatives below.

- Optimizely – Advanced testing with personalization features.

- VWO (Visual Website Optimizer) – Great for multivariate testing and heatmaps.

- Adobe Target – Enterprise solution for large-scale experimentation.

These tools simplify the execution of A/B testing for better UX by handling traffic distribution, metrics tracking, and result analysis.
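Each tool handles traffic distribution in its own way, and their internals aren’t described here. One common approach is deterministic hashing: combine an experiment name with a stable user ID, hash the result, and map it to a variant. Returning visitors then keep seeing the same version, while different experiments split users independently. This is a minimal sketch that assumes every visitor has a stable ID; `assign_variant` and the experiment name are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically map a user to one variant of a given experiment."""
    # Including the experiment name means each experiment gets its own split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer and pick a bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulate 10,000 hypothetical users; expect a roughly even split.
    counts = Counter(assign_variant(f"user-{i}", "cta-color-test") for i in range(10_000))
    print(counts)
```

Hashing avoids storing an assignment per user, and the same user ID always produces the same variant.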

Best Practices for A/B Testing Success

Simply running a test is not enough—you need to follow best practices to ensure meaningful results. Here are some tips to make A/B testing for better UX effective:

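- Test One Variable at a Time: Isolate a single change so any difference in results can be attributed to it.

- Run Tests for a Sufficient Duration: Decide on a sample size before you start and run the test for at least a full weekly cycle, so random noise and day-of-week patterns don’t decide the outcome. Stopping as soon as one variant looks ahead inflates false positives.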

- Segment Your Audience: User behavior can vary by device, location, or demographics.

- Avoid Testing During Unusual Events: Holidays or promotions can skew results.

- Document Learnings: Record test outcomes to build a knowledge base for future experiments.
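“Enough data” can be estimated before a test starts. The sketch below uses the common normal-approximation formula for comparing two conversion rates: n = (z₁₋α/₂ + z₁₋β)² · [p₁(1 − p₁) + p₂(1 − p₂)] / (p₂ − p₁)², at a 5% significance level and 80% power. The 5% baseline rate, the 10% relative lift, and the 2,000 daily visitors are hypothetical inputs, and the function names are illustrative.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant for a two-sided test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)  # the improved rate we want to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days of traffic required, assuming every visitor is enrolled in the test."""
    return ceil(n_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    n = sample_size_per_variant(baseline=0.05, relative_lift=0.10)
    print(f"{n} visitors per variant, about {days_needed(n, daily_visitors=2_000)} days")
```

With these inputs the estimate is roughly 31,000 visitors per variant, or about a month at 2,000 visitors a day. Smaller expected lifts push the number up quickly.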

Real-World Examples of A/B Testing for UX

To highlight the effectiveness of A/B testing for better UX, let’s look at real-world examples:

- Case Study 1: Button Color Change – An eCommerce site tested a red CTA button versus a green one. The red button improved click-through rates by 20%.

- Case Study 2: Simplified Checkout – A brand reduced its checkout form from 10 fields to 5. Conversion rates jumped by 35%.

- Case Study 3: Headline Variation – A SaaS company tested benefit-driven copy versus feature-driven copy. The benefit-driven headline attracted 15% more sign-ups.

These examples show that small tweaks identified through A/B testing for better UX can produce measurable business impact.
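The examples above don’t say whether their percentages are relative or absolute changes, and the distinction matters. A click-through rate that moves from 5% to 6% is a 20% relative lift but only a 1 percentage-point absolute lift. Here is a small sketch with hypothetical rates; `lift` is an illustrative helper name.

```python
def lift(rate_control: float, rate_variant: float) -> tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift in percent)."""
    absolute_pp = (rate_variant - rate_control) * 100
    relative_pct = (rate_variant - rate_control) / rate_control * 100
    return absolute_pp, relative_pct


if __name__ == "__main__":
    # Hypothetical click-through rates: 5% for the control, 6% for the variant
    abs_pp, rel_pct = lift(0.05, 0.06)
    print(f"absolute: +{abs_pp:.1f} pp, relative: +{rel_pct:.0f}%")
```

Reporting both numbers, along with the sample size, makes results like these easier to interpret and compare.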

Challenges and Limitations of A/B Testing

While powerful, A/B testing comes with challenges. Some common issues include:

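- Insufficient Traffic: Low-traffic sites may need weeks or months to reach statistically reliable results, if they reach them at all.

- Overtesting: Running too many experiments at once, especially on the same pages or users, can make tests interfere with each other and muddy the findings.

- Misinterpreted Data: Without proper analysis, results may be misleading. Two common causes are stopping a test early and checking many metrics but reporting only the one that moved.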

- Resource Constraints: Designing, running, and analyzing tests requires time and expertise.

Despite these challenges, when executed carefully, A/B testing for better UX remains one of the most effective strategies for continuous improvement.
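Much misinterpreted data comes from making many comparisons at once, such as several variants or a dozen metrics, and treating any p < 0.05 as a win. If the comparisons are independent, the chance of at least one false positive is 1 − (1 − α)^k. For 20 comparisons at α = 0.05, that is about 64%. The Bonferroni correction is one simple and conservative fix: it tests each comparison at α / k instead. This is a minimal sketch, and the function names are illustrative.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive, assuming independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_threshold(alpha: float, comparisons: int) -> float:
    """Per-comparison p-value threshold that keeps the family-wise error rate <= alpha."""
    return alpha / comparisons


if __name__ == "__main__":
    for k in (1, 5, 10, 20):
        print(f"{k:>2} comparisons: "
              f"false-positive risk = {family_wise_error_rate(0.05, k):.0%}, "
              f"Bonferroni threshold = {bonferroni_threshold(0.05, k):.4f}")
```

Bonferroni does not require the comparisons to be independent, but it becomes strict as k grows. This is one more reason to pick a primary metric before the test starts.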

Future of A/B Testing in UX

As digital experiences evolve, A/B testing for better UX will become more sophisticated. AI and machine learning are already being used to automate experimentation and personalize experiences at scale. Alongside static A/B tests, businesses are increasingly adopting adaptive approaches, such as multi-armed bandit algorithms, that shift traffic toward better-performing variants in real time based on user behavior. This shift can make experimentation faster and more user-centric, although adaptive methods trade some statistical precision for speed.
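The text doesn’t name a specific adaptive method. Thompson sampling, a well-known multi-armed bandit algorithm, is shown here as one illustrative example. It keeps a Beta distribution over each variant’s conversion rate, draws one sample from each, and sends the next visitor to the variant with the highest draw. As evidence accumulates, traffic drifts toward the better performer. This is a simulation: the “true” conversion rates are hypothetical and would be unknown in a real test, and `thompson_sampling` is an illustrative name.

```python
import random


def thompson_sampling(true_rates: list[float], visitors: int, seed: int = 42) -> list[int]:
    """Simulate a Beta-Bernoulli Thompson sampling bandit; return visits per variant."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    visits = [0] * len(true_rates)
    for _ in range(visitors):
        # Sample a plausible conversion rate for each variant from its Beta posterior
        # (starting from a uniform Beta(1, 1) prior) and show the highest draw.
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        chosen = draws.index(max(draws))
        visits[chosen] += 1
        # Simulated visitor converts with the chosen variant's hypothetical true rate.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return visits


if __name__ == "__main__":
    # Hypothetical true conversion rates, hidden from the algorithm
    print(thompson_sampling([0.04, 0.06], visitors=20_000))
```

The fixed seed makes the simulation reproducible. In production, the draws would be random, and real conversions would replace the simulated ones.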

Conclusion

In the competitive digital environment, user experience can make or break a business. Rather than relying on assumptions, A/B testing for better UX provides a scientific approach to understanding what users prefer. By testing design elements, analyzing results, and applying winning variations, businesses can boost engagement, increase conversions, and deliver seamless experiences. As technology evolves, A/B testing will continue to be a vital tool in creating websites and applications that truly meet user needs.